The AI resume screening failure unfolding in 2026 is quietly reshaping hiring—and not for the better. Candidates with strong experience, relevant skills, and proven results are being filtered out before a human ever sees their profile. Meanwhile, less-qualified resumes optimized for keywords sail through.

This isn’t speculation. Recruiters, hiring managers, and job seekers are all reporting the same thing: the smarter the automation got, the worse the outcomes became.

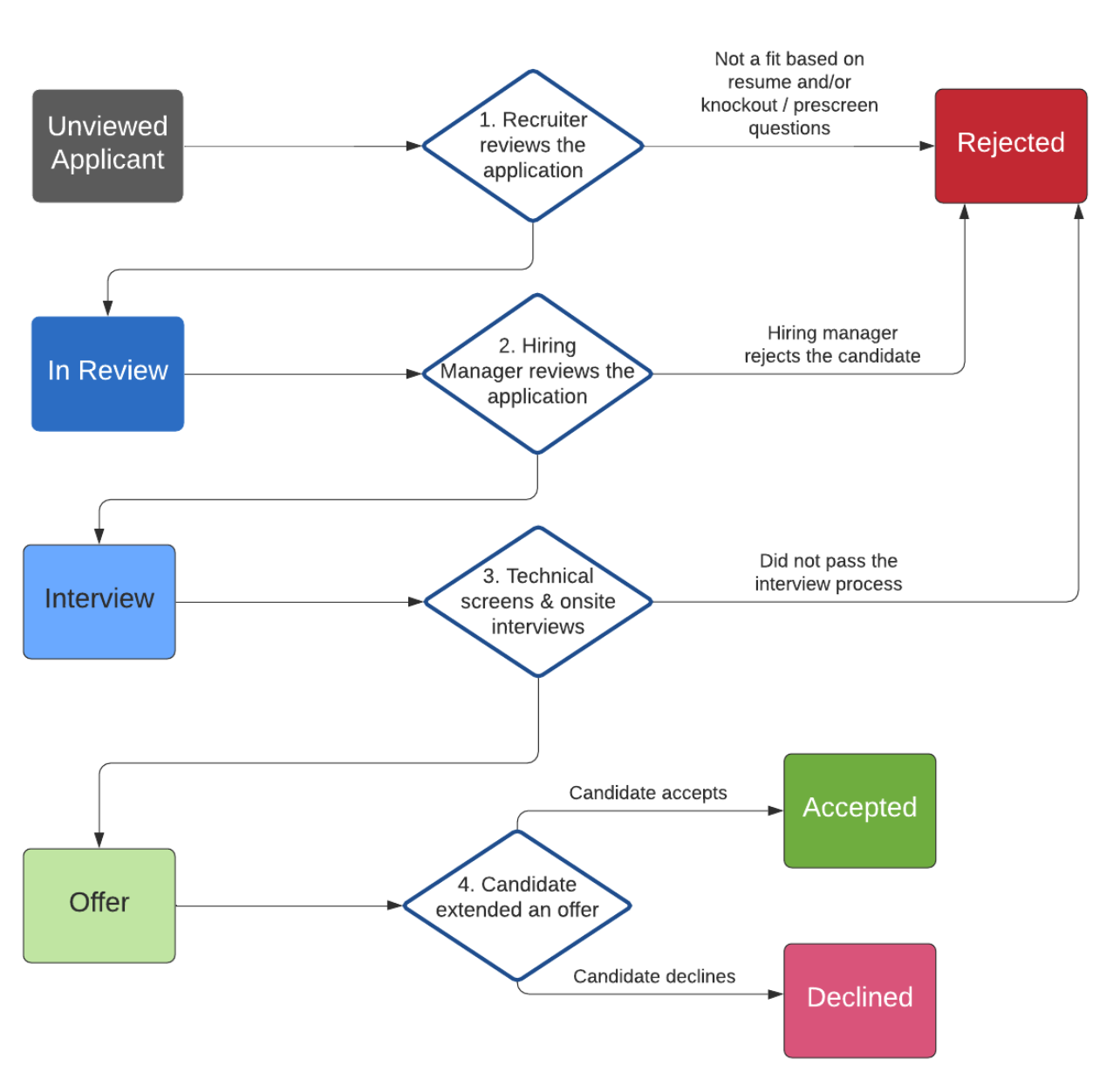

How AI Resume Screening Is Supposed to Work

In theory, hiring automation exists to help—not harm.

The intended goals:

• Reduce recruiter workload

• Filter large applicant volumes

• Identify role-relevant skills

• Speed up hiring decisions

But the AI resume screening failure emerges when efficiency replaces judgment.

Why Qualified Candidates Are Being Rejected First

The biggest irony: experience can become a liability.

Common rejection triggers include:

• Non-standard career paths

• Transferable skills phrased differently

• Older job titles not matching templates

• Industry-specific language AI doesn’t recognize

AI doesn’t understand context—it understands patterns. And real careers rarely follow templates.

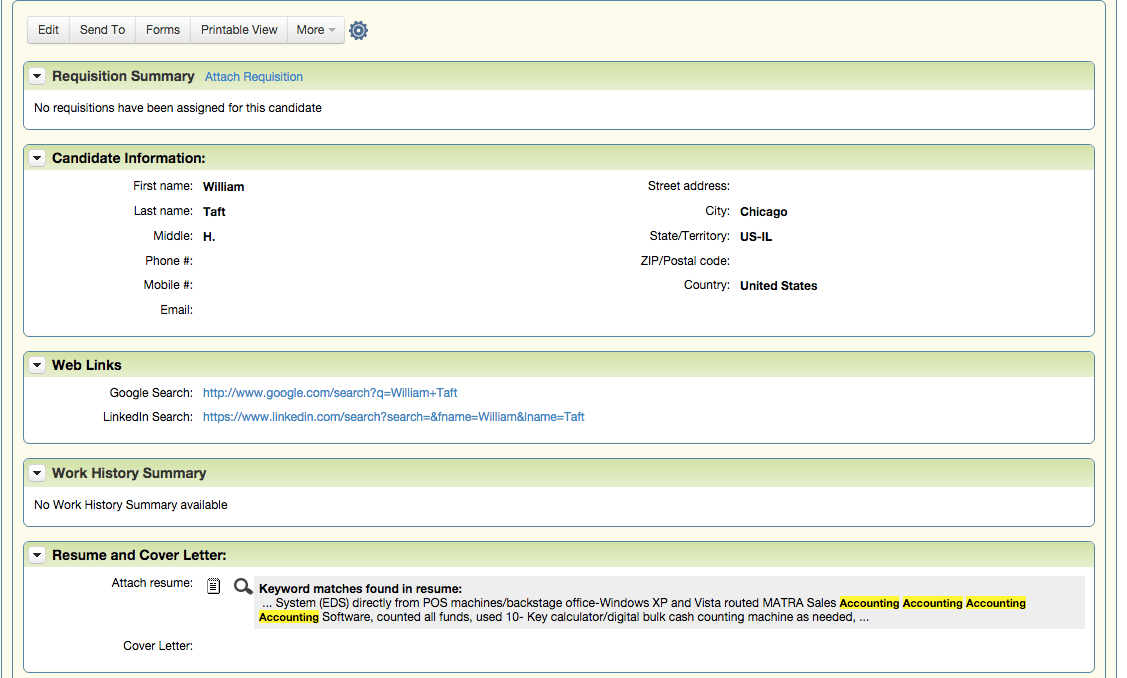

Keyword Matching Is Breaking Hiring

Most AI screeners rely heavily on keyword scoring.

What goes wrong:

• Candidates stuff resumes unnaturally

• Genuine experience phrased creatively scores lower

• Soft skills are ignored entirely

• Depth loses to repetition

This is the core AI resume screening failure: the system rewards keyword repetition and formatting, not competence.
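To make the failure mode concrete, here is a minimal Python sketch of a naive keyword scorer. It is an illustration only, not how any particular screening product works: the `keyword_score` function, the keyword list, and both resume snippets are invented for this example.

```python
import re
from collections import Counter


def keyword_score(resume_text, keywords):
    """Naive score: total number of keyword occurrences, case-insensitive.

    Hypothetical scoring rule for illustration; real screeners vary.
    """
    # Split the resume into lowercase word tokens and count each one.
    tokens = Counter(re.findall(r"[a-z0-9+#]+", resume_text.lower()))
    # Every repeat of a keyword adds to the score, so repetition pays off.
    return sum(tokens[k.lower()] for k in keywords)


keywords = ["python", "sql", "etl"]  # assumed job-description keywords

# Invented snippet: no real content, just the keywords repeated.
stuffed = "Python SQL ETL. Python SQL ETL. Python SQL ETL."

# Invented snippet: describes real work, but in the candidate's own words.
genuine = (
    "Built data pipelines that moved nightly warehouse loads "
    "from hours to minutes, writing the transforms in Python."
)

stuffed_score = keyword_score(stuffed, keywords)
genuine_score = keyword_score(genuine, keywords)
print(stuffed_score, genuine_score)
```

On these invented inputs the stuffed snippet scores 9 and the genuine one scores 1, even though only the second describes actual work. That gap is what "depth loses to repetition" looks like in practice.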

Why Applicant Tracking Systems (ATS) Penalize Career Gaps and Switches

Automated screeners tend to treat anything outside a continuous, single-track career as an anomaly.

They often penalize:

• Career breaks

• Freelancing or contract work

• Industry switches

• Portfolio-based careers

What humans see as adaptability, AI flags as risk.

Recruiters Know the System Is Broken—but Still Use It

Here’s the uncomfortable truth: recruiters don’t fully trust AI screeners either.

But they rely on them because:

• Application volumes are unmanageable

• Time pressure is constant

• Manual review isn’t scalable

• Hiring teams are understaffed

The AI resume screening failure persists because there’s no easy alternative.

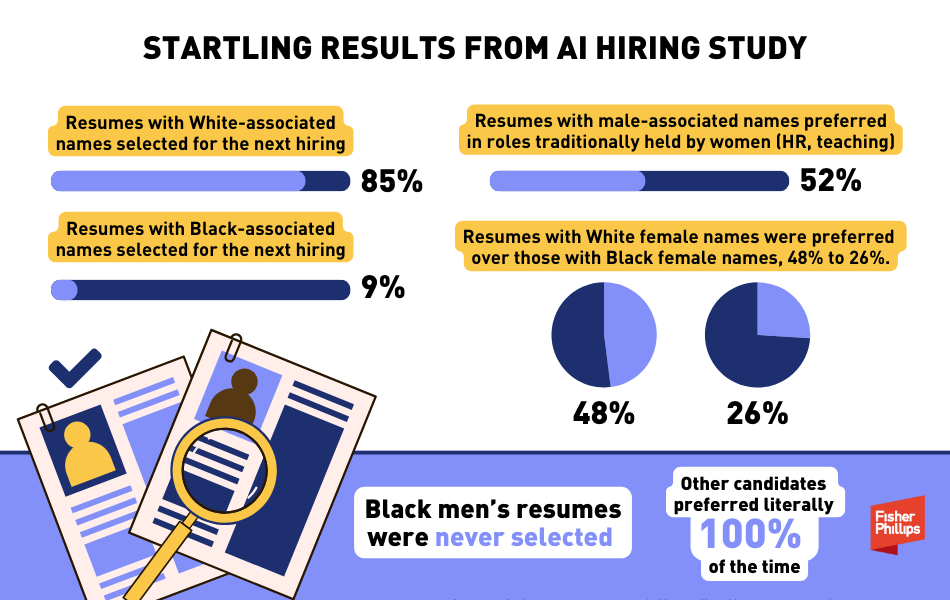

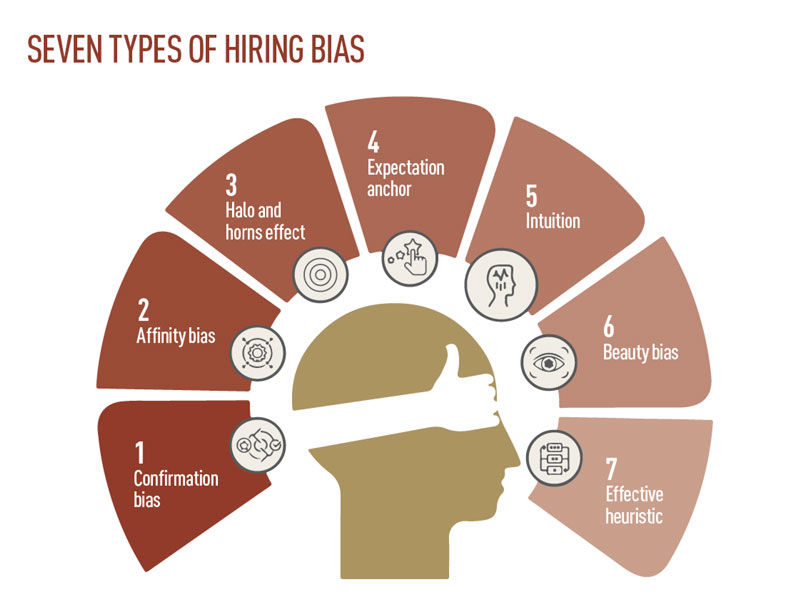

How Bias Sneaks In Through Automation

AI doesn’t remove bias—it can amplify it.

Bias enters through:

• Historical hiring data

• Skewed training sets

• Proxy variables (schools, titles, gaps)

When biased data trains the system, rejection patterns repeat silently.
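One way to make silent rejection patterns visible is to compare pass rates across groups defined by a proxy variable. The sketch below is a simplified illustration, not a compliance tool: the function names and the counts are made up, and the 0.8 threshold reflects the "four-fifths" rule of thumb from US employment guidelines, used here only as a flag for further investigation.

```python
def selection_rates(outcomes):
    """Map each group to its pass rate.

    outcomes: dict of group label -> (passed_screen, total_applicants).
    """
    return {group: passed / total for group, (passed, total) in outcomes.items()}


def impact_ratio(outcomes):
    """Lowest group pass rate divided by the highest group pass rate."""
    rates = selection_rates(outcomes)
    return min(rates.values()) / max(rates.values())


# Toy numbers, invented for illustration; not real hiring data.
toy_outcomes = {
    "no_career_gap": (40, 100),
    "career_gap": (20, 100),
}

ratio = impact_ratio(toy_outcomes)
needs_review = ratio < 0.8  # rule-of-thumb threshold, an assumption here
print(round(ratio, 2), needs_review)
```

A low ratio does not prove the screener is biased, but it does show where a human should look at what the system is rejecting and why.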

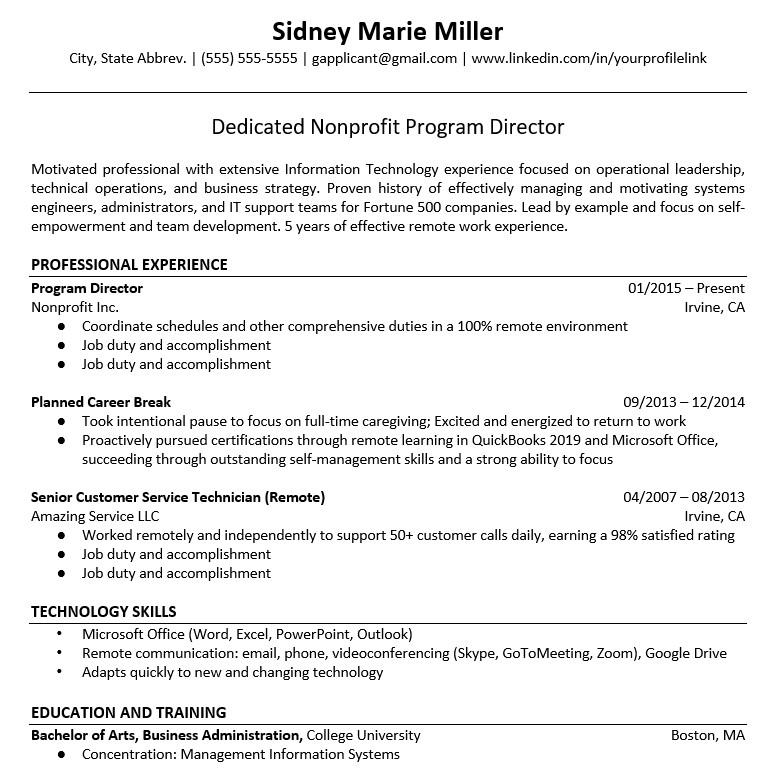

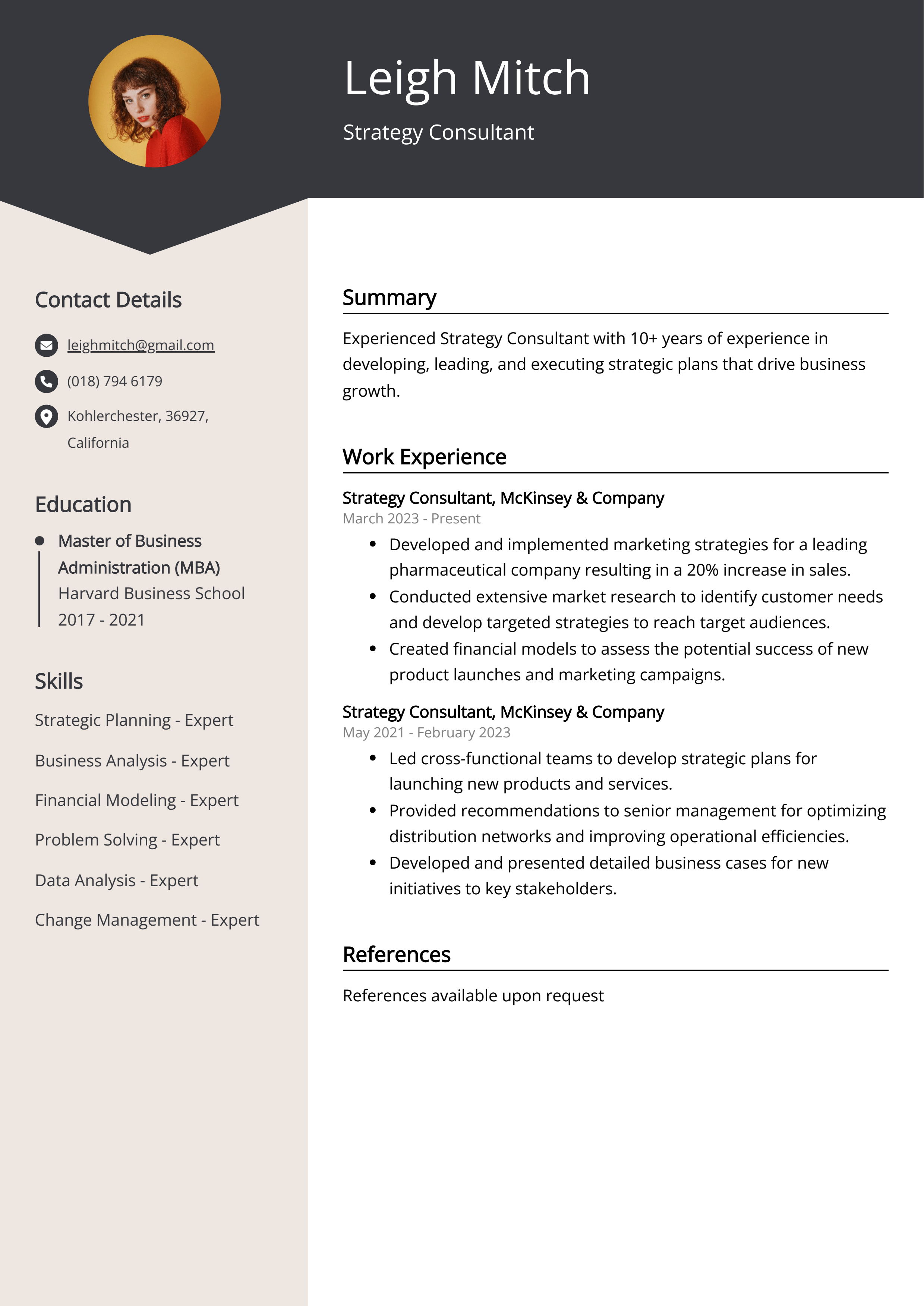

What Job Seekers Are Doing to Survive the System

Candidates are adapting—not happily, but strategically.

Survival tactics include:

• Creating multiple resume versions

• Reverse-engineering job descriptions

• Removing unconventional experience

• Optimizing for ATS, not honesty

The AI resume screening failure forces candidates to game the system instead of showcasing value.

Why This Is a Problem for Employers Too

This isn’t just hurting applicants—it’s hurting companies.

Employers face:

• Missed high-quality candidates

• Homogeneous teams

• Slower innovation

• Higher long-term turnover

Automation filters out exactly the diversity and depth companies claim to want.

What Better Hiring Automation Would Actually Look Like

Fixing the AI resume screening failure doesn’t mean abandoning AI—it means rebalancing it.

What works better:

• AI as assistive, not gatekeeper

• Human review checkpoints

• Skill-based assessments over keywords

• Transparency in screening criteria

Hiring should filter noise—not talent.
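The "assistive, not gatekeeper" idea can be sketched as a routing rule in which the model's score decides how soon a person looks at a resume, never whether one does. This is a hypothetical design: the `Screening` class, the `route` function, the queue names, and both thresholds are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Screening:
    candidate_id: str
    score: float  # hypothetical model score between 0 and 1


def route(screening, shortlist_at=0.75, low_at=0.40):
    """Assign a review queue based on score. No path auto-rejects."""
    if screening.score >= shortlist_at:
        return "shortlist"  # recruiter sees these first
    if screening.score >= low_at:
        return "human_review"  # borderline: a person decides
    return "low_priority_review"  # still reviewed, just later


# Invented candidates to show the three queues.
batch = [
    Screening("cand-001", 0.91),
    Screening("cand-002", 0.55),
    Screening("cand-003", 0.12),
]

queues = {s.candidate_id: route(s) for s in batch}
print(queues)
```

The design choice worth noting is the absence of a "reject" outcome: the score orders the queue, and the human review checkpoint stays in the loop for every candidate.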

Conclusion

The AI resume screening failure of 2026 exposes a hard truth: efficiency without understanding creates exclusion. Automated filters aren’t neutral—they shape who gets a chance. And right now, they’re rejecting some of the best candidates first.

Until hiring systems value context, adaptability, and real experience, both job seekers and employers will keep losing. Technology was supposed to fix hiring. Instead, it broke the first door.

FAQs

Why are AI resume screeners rejecting qualified candidates?

Because they rely on rigid keyword patterns instead of contextual understanding.

Can optimizing a resume for ATS help?

Yes, but it often forces candidates to oversimplify or distort their experience.

Do recruiters trust AI screening tools?

Many don’t—but they depend on them due to application volume.

Is AI hiring biased?

It can be, especially when trained on biased historical data.

What’s the best solution to AI resume screening failure?

Using AI as a support tool with human oversight—not as the final decision-maker.
